Racism in tech

How biases become embedded in software and algorithms we increasingly rely on

“We think of AI in a way that science fiction has presented it to us,” said Jupiter Fka Imani, an activist and “hood strategist” who studied mechanical engineering and computer science at the University of Massachusetts. “I’ve been watching this show called NEXT, which just wrapped up, and it’s basically following this AI that is intentionally trying to eradicate humans.”

Though no one knows exactly what the future holds, AI is certain to become only more integral to our day-to-day lives.

“Everyone’s scared of Google,” Imani said of the search giant’s use of AI, adding it’s not the AI we should be afraid of, but how it’s being used.

Deep learning, a branch of AI, loosely mimics the way an organic brain sorts and processes information. It builds artificial neural networks whose units pass signals to one another, much as a brain’s nerve cells collect signals from their neighbours. A deep neural network is “deep” because it stacks multiple layers, each transforming the data it receives from the layer before, which makes applications such as speech recognition, image recognition, and natural language processing possible.
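For readers curious what “layers” means in practice, here is a toy sketch in Python (standard library only) of a small network passing a signal through two hidden layers. It is an illustrative assumption, not how any particular product works: the layer sizes, the random untrained weights, and helper names such as `dense_layer` are invented for this example. Real systems learn their weights from large amounts of data, which is exactly where the biases discussed in this article can creep in.

```python
import math
import random

def relu(x: float) -> float:
    # Passes positive signals through and silences negative ones.
    return max(0.0, x)

def sigmoid(x: float) -> float:
    # Squashes any number into the range 0 to 1.
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases, activation):
    # Each unit takes a weighted sum of all incoming signals plus a bias,
    # then applies a non-linear activation (loosely, a neuron "firing").
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def random_layer(n_in, n_out, rng):
    # Hypothetical untrained weights; real systems learn these from data.
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    # "Deep" means the signal passes through several layers in sequence,
    # each transforming the previous layer's output.
    signal = inputs
    for weights, biases, activation in layers:
        signal = dense_layer(signal, weights, biases, activation)
    return signal

rng = random.Random(0)
sizes = [4, 8, 8, 2]  # arbitrary: 4 inputs, two hidden layers of 8, 2 outputs
layers = []
for i, (n_in, n_out) in enumerate(zip(sizes, sizes[1:])):
    weights, biases = random_layer(n_in, n_out, rng)
    is_output = i == len(sizes) - 2
    layers.append((weights, biases, sigmoid if is_output else relu))

output = forward([0.2, 0.5, 0.1, 0.9], layers)
print(output)  # two numbers between 0 and 1
```

With untrained weights the two outputs carry no meaning; training is the step that ties them to real-world labels, and whatever patterns sit in that training data get carried along.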

Imani referred to a video that circulated online showing a Black man trying to get soap in a Marriott restroom. The dispenser, which used near-infrared technology, did not recognize his dark skin, but it did recognize the hand of a white man who was there to demonstrate the flaw.

The soap dispenser incident is a microcosm of a pressing issue in tech.

“The majority of people who were working on these databases, initiating and developing them, [are] white [and male],” said Imani.

They believe AI should not be something to fear, but rather an opportunity for people to educate themselves and think more critically about the technology we’ve already grown accustomed to using daily. Technological systems take in input in order to perform tasks and, supposedly, to deliver better outcomes for society. From manufacturing to traffic safety assistance, AI is increasingly becoming part of the social fabric.

“The AI that we have is essentially taking information that we’ve given it in trying to make intelligent decisions that mimic humans, but they’re not thinking for themselves,” Imani said. “If you actually look at the science and the mechanics of it, we’re nowhere near able to develop an AI that could mimic humans [entirely] right now.”

Technology has been an incredible tool in fields such as engineering, medicine, and design, but also in the fight against racism.

Police body cam footage, surveillance tape, and bystander video all provided proof that George Floyd was murdered in Minneapolis while being arrested for allegedly attempting to pay with a counterfeit bill.

Social media algorithms and news aggregators showed articles and think pieces relating to the Black Lives Matter movement, highlighting the daily prejudices endured by Black people and other marginalized groups.

However, technology can also perpetuate the racism latent in our public education, employment, healthcare, policing systems, and other institutions.

The policing of Black lives

Montreal is no stranger to systemic violence. Sheffield Matthews was a 41-year-old father struggling with poverty, according to CTV. On Oct. 29, at an intersection in Notre-Dame-de-Grâce, he was in distress and holding a knife. That’s all it took for Montreal police to shoot him after he allegedly approached their vehicle.

He was one of many Black men who have fallen victim to police brutality in NDG alone. Others include Anthony Griffin in 1987 and Nicholas Gibbs in 2018, both of whom were killed by police.

Racialized communities have ample reason to distrust police, yet police services increasingly work with powerful databases and algorithms, using AI that aims to identify an individual’s potential to offend, according to a report from The Citizen Lab and the International Human Rights Program at the University of Toronto’s Faculty of Law.

Police stop data, gang databases, reports by members of the public, records of under-reported and under-documented crimes, arrests, convictions, and guilty pleas are among the data sources behind what falls under the umbrella term “algorithmic policing.”

The Citizen Lab found that police services typically use algorithms to target crimes that have not yet occurred: a pre-emptive monitoring and forecasting system that can supposedly predict potential crime.

Racialized and marginalized communities, including women, are less likely to report crime because of often-unrecognized language or cultural barriers, fear of deportation or of law enforcement, or prior bad experiences with and mistrust of police institutions. As a result, the crime data these systems rely on gives an incomplete picture.

For individuals and communities already subject to systemic mistreatment, including through criminal record checks, algorithmic policing only heightens police scrutiny and compounds long-term harms to local health, safety, economic well-being, and human dignity, according to the report.

“We’re experiencing a ton of medical racism that impacts our treatment and our outcomes,” noted Imani, who is Black.

Racism in the Canadian healthcare system proved fatal for Joyce Echaquan, a 37-year-old Atikamekw woman who, last September, filmed herself on Facebook Live clearly in pain and being abused by healthcare workers, shortly before her death.

Before AI systems can begin to assist doctors and clinicians in hospitals, they have to be trained on clinical data so they can help make better clinical decisions, according to an article in Stroke and Vascular Neurology. As with algorithmic policing, hospitals work with data and AI.

Data generated from clinical activities may include medical notes, electronic recordings from medical devices, physical examination findings, clinical laboratory results, and medical images.

“You get this data that’s biased,” said Imani. “That means white folks [who] have never even considered or engaged with us critically are now creating algorithms to figure out who should get care. Who should be—I am not going to say denied care but—unprioritized.

“So when, for example, you’re developing a machine-learning algorithm that’s going to decide when someone needs healthcare and when someone does not need healthcare, you have the biases inherent to our healthcare system creating data that’s already biased because Black folks typically don’t have access to quality healthcare,” they continued.
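Imani’s point about biased data can be illustrated with a deliberately simplified, hypothetical Python sketch. It assumes a naive model that uses a patient’s past number of healthcare visits as a stand-in for how much care they need. The groups, numbers, cutoff, and function names are invented for illustration and do not describe any real hospital system. Because group_b is assumed to have had less access to care, its patients have fewer recorded visits at the same level of true need.

```python
# Hypothetical patients: (group, true_need, past_visits).
# Invented numbers for illustration only. Both groups have the same range
# of true need, but group_b has had less access to care, so fewer visits.
patients = [
    ("group_a", 0.8, 8), ("group_a", 0.5, 5), ("group_a", 0.2, 2),
    ("group_b", 0.8, 4), ("group_b", 0.5, 2), ("group_b", 0.2, 1),
]

def proxy_score(past_visits: int) -> float:
    # A naive "model" that treats past healthcare use as a stand-in for need.
    return past_visits / 10

def prioritized(rows, cutoff):
    # Patients whose proxy score reaches the cutoff are flagged for extra care.
    # Note: the code never looks at group membership or true need directly.
    return [(group, need) for group, need, visits in rows if proxy_score(visits) >= cutoff]

flagged = prioritized(patients, cutoff=0.5)
print(flagged)  # [('group_a', 0.8), ('group_a', 0.5)]
```

In this toy run, the group_b patient with the highest true need (0.8) is not flagged, while a group_a patient with lower need (0.5) is. The disparity comes entirely from the historical data the score is built on.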

For now, the data behind police and hospital algorithms remain inaccurate, biased, and exclusionary.

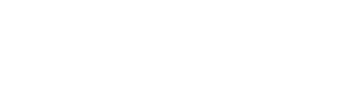

As facial-recognition technology is increasingly incorporated into identification work, algorithms could become a second pair of eyes for human analysts, according to the U.S. National Institute of Justice. Facial-recognition software used by law enforcement compares suspects’ photos against images such as mugshots or driver’s licence photos. Checking whether two photos show the same person is termed “one-to-one” matching, while searching a whole database for possible matches is termed “one-to-many” matching.

One-to-one algorithms developed in the U.S. showed higher false-positive rates when matching Asian, Black, and Indigenous faces, according to the U.S. National Institute of Standards and Technology. Indigenous people had the highest false-positive rates.
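To make “false-positive rate” concrete: in one-to-one matching, a system compares two photos, produces a similarity score, and declares a match if the score clears a threshold. A false positive is a match declared between photos of two different people. The Python sketch below computes that rate separately for each group. The group names, scores, and threshold are hypothetical toy values invented for illustration; they are not NIST data or any vendor’s method.

```python
from collections import defaultdict

# Hypothetical toy comparisons: (group, similarity_score, same_person).
# Invented for illustration only; these are NOT measured results.
comparisons = [
    ("group_a", 0.91, True), ("group_a", 0.35, False),
    ("group_a", 0.42, False), ("group_a", 0.20, False),
    ("group_b", 0.88, True), ("group_b", 0.71, False),
    ("group_b", 0.55, False), ("group_b", 0.30, False),
]

THRESHOLD = 0.6  # assumed cutoff: scores at or above it count as a "match"

def declares_match(score: float, threshold: float) -> bool:
    # One-to-one verification: a single pair of photos, a single yes/no answer.
    return score >= threshold

def false_positive_rates(rows, threshold):
    # Count only pairs of DIFFERENT people, and how often they were "matched".
    impostor_pairs = defaultdict(int)
    false_matches = defaultdict(int)
    for group, score, same_person in rows:
        if not same_person:
            impostor_pairs[group] += 1
            if declares_match(score, threshold):
                false_matches[group] += 1
    return {g: false_matches[g] / impostor_pairs[g] for g in impostor_pairs}

rates = false_positive_rates(comparisons, THRESHOLD)
print(rates)  # {'group_a': 0.0, 'group_b': 0.3333333333333333}
```

The same threshold applied to everyone can still produce very different error rates across groups, which is why disparities like the ones NIST reported are measured group by group.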

“Affect recognition,” a subset of facial recognition, seeks to go further by interpreting faces, detecting “micro-expressions,” and matching them to “true feelings.” According to New York University’s AI Now Institute, the scientific justifications for these claims are highly questionable, affect recognition is among the least accurate identity-tracking methods, and it prolongs a history of racist pseudoscience and malevolent practices.

Its report outlines how faces could become tied to personal data. “Once identified, a face can be linked with other forms of personal records and identifiable data, such as credit score, social graph, or criminal record.”

Last June, as protests against racial inequality surged across the U.S., Amazon announced a one-year ban on police use of its facial-recognition software.

The problem has also surfaced in remote education. The Concordia Student Union (CSU) received numerous reports that online proctoring systems discriminate against students with darker complexions, returning “lack of brightness” error messages. The CSU highlighted these concerns in an open letter demanding, among other things, that the university stop using online proctoring software.

Lack of diversity in tech

Imani recalls attending a tech-industry conference for an engineering class a couple of years ago. “As someone who looks at and really enjoys the kinds of production that these companies will put on, it’s amazing to see how they envision technology being incorporated into the lives of regular people,” they said.

However, Imani encountered only two Black people at the conference.

Many of the people working at big tech companies such as Tesla, Facebook, Google, Amazon, Apple, and Microsoft are white and male, and they develop technologies that mirror their own biases. Their products can exclude or disregard people with darker complexions and people of different ethnicities and backgrounds.

According to Bloomberg, only three per cent of workers in technical jobs at America’s eight largest tech companies are Black.

“[Google is] now facing this really intense backlash of how Black women are being treated, especially in these specific fields around data and AI,” said Imani. They were referring to the company’s recent ouster of renowned AI-ethics researcher Dr. Timnit Gebru over academic research she had co-authored highlighting the risks of large language models, which are key to Google’s business, according to MIT Technology Review.

“They are talking about going to Mars,” said Imani, drawing a parallel with Elysium, a sci-fi film depicting a world where the wealthy live aboard a luxury space station while the poor are left to fight and fend for themselves on Earth.

AI should be actively centred on community development and must avoid "recreating that whiteness and embedding it into the technology," said Imani. The goal, they said, should be to shift our entire social structure.

“Our job is to critically engage with the whiteness that created this system, currently.”

This article originally appeared in The Influence/Influenced Issue, published January 13, 2021.